Address

304 North Cardinal St.

Dorchester Center, MA 02124

Work Hours

Monday to Friday: 7AM - 7PM

Weekend: 10AM - 5PM

You can absolutely customize your SOAR automation workflows, and you probably should. Out-of-the-box playbooks are like a suit bought off the rack. It might fit okay, but it won’t feel like yours. The real power comes from tailoring those workflows to your specific tools, your team’s processes, and the unique threats your organization faces.

We’ve seen it time and again: a generic phishing playbook might miss a critical step in your email security setup, while a custom-built one acts like a precision instrument. This process isn’t about writing complex code; it’s about thoughtful design.

It starts with understanding what you have and what you need to protect. The rest is a matter of mapping and building. Keep reading to learn how to shape your SOAR into a true extension of your security team.

The biggest hurdle teams face isn’t the technical act of customization; it’s knowing where to begin. The sheer number of integration connectors and potential playbook task sequences can be paralyzing. You look at a blank playbook builder interface and wonder how to fill it.

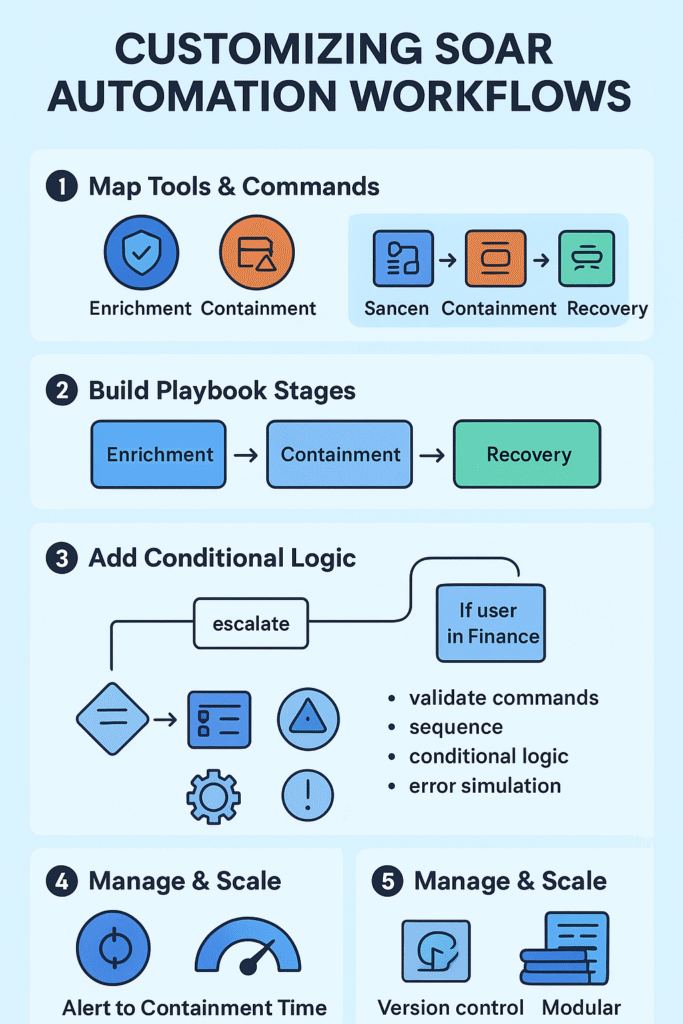

We find the most effective method is to ignore the blank canvas at first. Start with a spreadsheet or a whiteboard. List every security tool you have connected to your SOAR platform. Next to each tool, write down the specific actions (the integration commands) it can perform. For an endpoint tool, that might be isolate_device or initiate_scan. For your firewall, it could be block_ip_address. This inventory becomes your palette of colors.

This approach lays the foundation for a truly custom workflow, one where security orchestration, automation, and response emerges organically from the tools you already use and the actions they can take. You can’t paint a picture until you know what colors you have to work with.

Once you have your commands, the next step is organization. Group them into functional categories. Which commands are for enrichment, for gathering more data? Which are for containment, for stopping a threat in its tracks? And which are for recovery, putting things back to normal? This simple act of categorization starts to impose order on the chaos. It reveals the natural stages of your future playbook.
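The inventory and the categorization step can be made concrete with a small Python sketch. It stores the whiteboard as a dictionary and regroups the commands by stage. isolate_device, initiate_scan, and block_ip_address come from the examples above. The tool names, the other commands, and the category assigned to each command are hypothetical placeholders for whatever your own connectors expose.

```python
from collections import defaultdict

# Hypothetical inventory: tool -> {command: functional category}.
# isolate_device, initiate_scan, and block_ip_address come from the text above;
# every other name is a placeholder for your own connectors.
INVENTORY = {
    "edr": {
        "get_device_info": "enrichment",
        "initiate_scan": "enrichment",
        "isolate_device": "containment",
        "release_device": "recovery",
    },
    "firewall": {
        "lookup_ip_reputation": "enrichment",
        "block_ip_address": "containment",
        "unblock_ip_address": "recovery",
    },
    "identity": {
        "get_user_details": "enrichment",
        "disable_account": "containment",
        "enable_account": "recovery",
    },
}


def group_by_category(inventory):
    """Regroup the tool-centric inventory into playbook stages."""
    stages = defaultdict(list)
    for tool, commands in inventory.items():
        for command, category in commands.items():
            stages[category].append(f"{tool}.{command}")
    return dict(stages)


if __name__ == "__main__":
    for stage, commands in group_by_category(INVENTORY).items():
        print(f"{stage}: {', '.join(commands)}")
```

The grouped output is a rough outline of the playbook's stages: enrichment first, then containment, then recovery.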

With your commands categorized, you then identify your key artifacts. These are the critical data points that flow through your security incidents. A device ID, a user ID, an IP address, a file hash. Your environment dictates what’s most important.

If you’re an MSSP, you might standardize on a core set of artifacts across all your clients for consistency. The goal is to map your commands directly to these artifacts. You’re essentially asking, “What can I do with this device ID?” The answers form the backbone of your playbook logic.
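Here is a minimal sketch of the artifact mapping, assuming the same hypothetical command names. Each artifact type points at the commands that accept it as input. That way, asking “What can I do with this device ID?” becomes a dictionary lookup.

```python
# Hypothetical mapping: artifact type -> commands that accept it as input.
ARTIFACT_ACTIONS = {
    "device_id": ["edr.get_device_info", "edr.initiate_scan", "edr.isolate_device"],
    "user_id": ["identity.get_user_details", "identity.disable_account"],
    "ip_address": ["firewall.lookup_ip_reputation", "firewall.block_ip_address"],
    "file_hash": ["threat_intel.lookup_hash"],
}


def actions_for(artifacts):
    """Return the commands available for the artifacts actually present in an incident."""
    return {
        artifact_type: ARTIFACT_ACTIONS[artifact_type]
        for artifact_type, value in artifacts.items()
        if value and artifact_type in ARTIFACT_ACTIONS
    }


if __name__ == "__main__":
    incident = {"device_id": "WS-0142", "user_id": "jdoe", "ip_address": None}
    for artifact_type, commands in actions_for(incident).items():
        print(artifact_type, "->", commands)
```

Missing or empty artifacts simply drop out. That doubles as a cheap first check on what a playbook can and can’t do for a given incident.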

You can almost see the whiteboard in your head when you open the playbook builder. The layout shouldn’t surprise you, because you already sketched it out earlier. Now you’re just turning that sketch into something real, one stage at a time.

The enrichment stage is your information-gathering phase, the part where the alert stops being just a blinking light and starts to look like a story you can actually follow. Here, the playbook automatically pulls context from your systems without an analyst clicking through ten consoles [1]. For example, it might:

All this happens in the background. No one has to touch a keyboard for the playbook to build a complete picture around a single alert. That’s the jump from a raw alert to a qualified incident, where the analyst isn’t asking, “What is this?” but, “What do we do next?”
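The following is a hedged Python sketch of an enrichment stage. The three lookup functions are hypothetical stand-ins that return canned data; in a real playbook, each would call an integration connector. The lookups don’t depend on one another, so they run in parallel with concurrent.futures. The results are then attached to the alert as context.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for connector calls. A real playbook would query your
# EDR, identity provider, and threat-intel integrations here.
def get_device_info(device_id):
    return {"hostname": device_id, "os": "Windows 11", "owner": "jdoe"}


def get_user_details(user_id):
    return {"user": user_id, "department": "Finance", "privileged": False}


def lookup_ip_reputation(ip_address):
    return {"ip": ip_address, "score": 85, "verdict": "malicious"}


ENRICHERS = {
    "device_id": get_device_info,
    "user_id": get_user_details,
    "ip_address": lookup_ip_reputation,
}


def enrich(alert):
    """Run every applicable lookup in parallel and attach the results as context."""
    artifacts = {
        kind: value
        for kind, value in alert["artifacts"].items()
        if value and kind in ENRICHERS
    }
    # The lookups are independent, so they can run concurrently.
    with ThreadPoolExecutor(max_workers=max(len(artifacts), 1)) as pool:
        futures = {kind: pool.submit(ENRICHERS[kind], value) for kind, value in artifacts.items()}
        context = {kind: future.result() for kind, future in futures.items()}
    return {**alert, "context": context}


if __name__ == "__main__":
    alert = {
        "id": "ALERT-1",
        "artifacts": {"device_id": "WS-0142", "user_id": "jdoe", "ip_address": "203.0.113.7"},
    }
    print(enrich(alert)["context"])
```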

The containment stage is where actions start to impact people and systems, and that’s where you want to be careful, especially early on. When you’re first rolling out automation, or when the action could be highly disruptive, it makes sense to add workflow approval gates. The playbook can line up a proposed response and then pause for a human to approve it.

This staged automation keeps speed and control in balance, which is exactly what a managed SOAR platform is designed to support: automation moves incidents forward, and human oversight steps in only where the risk justifies it. For example, you might require approval workflows or conditional gating before isolation, blocking, or account suspension actions are executed.

For instance, it might:

In those cases, the playbook can surface a button: someone has to click “approve” before the action runs. As your trust in the logic grows (and your false positive rate falls), you can start automating more of these steps.

At higher confidence levels, the playbook might:

So containment slowly shifts from “suggest and wait” to “act quickly when the evidence is strong.”
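The shift from “suggest and wait” to “act when the evidence is strong” can be modeled as a confidence threshold in front of every containment action. This is a minimal sketch, not any platform’s API. The 0 to 100 confidence score, the threshold of 90, and the class and method names are all assumptions for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical confidence score (0-100) above which containment runs without approval.
AUTO_EXECUTE_THRESHOLD = 90


@dataclass
class ProposedAction:
    command: str
    target: str
    confidence: int
    status: str = "pending"


@dataclass
class ContainmentGate:
    executed: list = field(default_factory=list)
    queue: list = field(default_factory=list)

    def propose(self, action):
        # Strong evidence: act now. Anything weaker waits for a human decision.
        if action.confidence >= AUTO_EXECUTE_THRESHOLD:
            self._run(action)
        else:
            self.queue.append(action)
        return action.status

    def approve(self, action):
        # Called when an analyst clicks "approve" on a queued action.
        self.queue.remove(action)
        self._run(action)

    def reject(self, action):
        self.queue.remove(action)
        action.status = "rejected"

    def _run(self, action):
        # Placeholder for the real connector call, such as isolate_device.
        action.status = "executed"
        self.executed.append(action)
```

As trust in the logic grows, you can lower AUTO_EXECUTE_THRESHOLD. That moves more actions from the approval queue to automatic execution without restructuring the playbook.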

Recovery is the quiet part after the alarm stops blaring, and it’s the stage many teams skip or rush through. Stopping the threat is only half the job; you also have to undo the side effects of your own defensive moves.

A well-tuned playbook should include recovery steps that:

This is how you handle false positives without making everyone hate security. Someone’s account gets locked, or their laptop goes offline, and once you confirm it wasn’t malicious, the playbook can help put everything back the way it was with minimal delay.
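One way to make recovery reliable is to record every containment action as it happens, together with the command that reverses it. A confirmed false positive can then be unwound in one step. The command pairs below are hypothetical. If any recorded action lacks a defined inverse, the sketch refuses to roll back at all rather than leave the environment half-restored.

```python
# Hypothetical pairing of containment commands with the commands that undo them.
INVERSE = {
    "isolate_device": "release_device",
    "block_ip_address": "unblock_ip_address",
    "disable_account": "enable_account",
}


class RecoveryLog:
    """Records containment actions so they can be reversed if an alert proves benign."""

    def __init__(self):
        self._actions = []

    def record(self, command, target):
        self._actions.append((command, target))

    def rollback(self):
        """Return the recovery steps, most recent first, and clear the log."""
        missing = [command for command, _ in self._actions if command not in INVERSE]
        if missing:
            # Refuse to half-restore: fix the playbook's recovery mapping first.
            raise ValueError(f"No recovery step defined for: {missing}")
        steps = [(INVERSE[command], target) for command, target in reversed(self._actions)]
        self._actions.clear()
        # A real playbook would call the connector for each step before returning.
        return steps
```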

Case management shouldn’t be its own separate island either. It should be threaded through every stage:

That way, when the incident is done, operations return to normal quickly, and you’re left with a clean record of what happened, why you did what you did, and how you might refine the playbook next time.
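The following sketches case management threaded through the stages, assuming a simple in-memory case record. Every stage appends a timestamped entry saying what it did and why, so the record is complete by the time the incident closes. The field names are hypothetical; a real platform would write these entries to its own case or ticketing system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class CaseRecord:
    incident_id: str
    timeline: list = field(default_factory=list)

    def note(self, stage, action, reason):
        # Every stage writes to the same case, so the record builds itself as the playbook runs.
        self.timeline.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "stage": stage,
            "action": action,
            "reason": reason,
        })

    def stages_touched(self):
        return [entry["stage"] for entry in self.timeline]


if __name__ == "__main__":
    case = CaseRecord("INC-1001")
    case.note("enrichment", "lookup_ip_reputation", "IP attached to alert")
    case.note("containment", "isolate_device", "approved by analyst")
    case.note("recovery", "release_device", "confirmed false positive")
    for entry in case.timeline:
        print(entry)
```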

You really see automation come alive when it stops being a straight line and starts making decisions.

Conditional logic is what turns a plain, linear playbook into something that behaves more like an analyst. Instead of always marching through the same steps (A, then B, then C), the playbook can pause and ask, “What’s true right now?” and then act based on that answer.

Some simple but powerful examples:

This branching logic lets a single playbook cover many variations of the same incident type. One workflow, many paths. That makes the automation more adaptable, instead of forcing you to build a new playbook for every small exception.
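A branching decision can be as small as one function. It inspects the enrichment results and returns the name of the next path. The context keys (verdict, asset_critical) and the path names below are hypothetical. Note that a missing verdict gets its own explicit path instead of falling through to containment.

```python
def choose_path(context):
    """Pick the next branch of the playbook based on what enrichment found."""
    verdict = context.get("verdict")
    if verdict is None:
        return "escalate_missing_data"  # can't decide safely without a verdict
    if verdict == "benign":
        return "close_as_false_positive"
    if context.get("asset_critical"):
        return "request_approval"  # disruptive action on a critical asset needs sign-off
    return "auto_contain"


if __name__ == "__main__":
    for ctx in ({}, {"verdict": "benign"}, {"verdict": "malicious", "asset_critical": True}):
        print(ctx, "->", choose_path(ctx))
```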

Of course, once you have branches, you also have more ways things can break. That’s why testing each logic path matters as much as writing it. A playbook testing simulator (or lab environment) lets you:

Good conditional logic isn’t just clever; it’s predictable and tested.
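At its core, a testing simulator runs the playbook against sample alerts with its connectors swapped for a recorder. Each branch can then be exercised without touching production. This sketch assumes a tiny hypothetical playbook with one branch per verdict. The recorder captures which commands each path would have fired.

```python
class DryRunConnector:
    """Records calls instead of executing them, so a branch can be exercised safely."""

    def __init__(self):
        self.calls = []

    def __call__(self, command, target):
        self.calls.append((command, target))
        return {"status": "simulated"}


def run_playbook(alert, execute):
    """Hypothetical playbook with one branch per verdict."""
    verdict = alert.get("verdict")
    if verdict == "malicious":
        execute("isolate_device", alert["device_id"])
        execute("block_ip_address", alert["ip_address"])
    elif verdict == "suspicious":
        execute("initiate_scan", alert["device_id"])
    # benign or missing verdict: no actions


def simulate(cases):
    """Run each sample alert through the playbook and list the commands it would fire."""
    results = {}
    for name, alert in cases.items():
        recorder = DryRunConnector()
        run_playbook(alert, recorder)
        results[name] = [command for command, _ in recorder.calls]
    return results


CASES = {
    "malicious": {"verdict": "malicious", "device_id": "WS-0142", "ip_address": "203.0.113.7"},
    "suspicious": {"verdict": "suspicious", "device_id": "WS-0142"},
    "benign": {"verdict": "benign"},
}

if __name__ == "__main__":
    print(simulate(CASES))
```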

For an MSSP, this kind of customization isn’t a nice-to-have. It’s the job.

Every client brings a different mix of tools, policies, and risk tolerance. Some are fine with blocking fast and asking questions later. Others need layered approvals for almost everything. A rigid, one-size-fits-all playbook just falls apart in that kind of variety.

So we design our playbooks to bend without breaking, using:

That way, we can keep a strong, shared core (say, a phishing response playbook) and still adapt the details for each environment. For example:

Underneath all that, playbook data mapping does the quiet but essential work: making sure the right fields, tags, and case details land in the right place for each client’s systems. Same incident logic, different wiring per environment.

That’s how you get scale without losing precision.
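The shared-core-plus-overrides pattern, and the per-client data mapping underneath it, can be sketched as plain dictionaries. The client names, settings, and ticketing field names are hypothetical. Top-level keys in an override replace the core value outright. deepcopy keeps one client’s config from leaking into the shared core.

```python
from copy import deepcopy

# Shared core settings for a hypothetical phishing-response playbook.
CORE_PHISHING = {
    "auto_block_sender": True,
    "approval_required_for": ["disable_account"],
    "field_map": {"case_title": "title", "severity": "severity"},
}

# Hypothetical per-client overrides; an empty dict means "use the core as-is".
CLIENT_OVERRIDES = {
    "client_a": {},
    "client_b": {
        "auto_block_sender": False,
        "approval_required_for": ["disable_account", "quarantine_email"],
        "field_map": {"case_title": "short_description", "severity": "priority"},
    },
}


def build_config(client):
    """Merge a client's top-level overrides onto a copy of the shared core."""
    config = deepcopy(CORE_PHISHING)
    config.update(deepcopy(CLIENT_OVERRIDES.get(client, {})))
    return config


def map_case_fields(case, field_map):
    """Rename case fields to whatever the client's ticketing system expects."""
    return {field_map.get(key, key): value for key, value in case.items()}


if __name__ == "__main__":
    config = build_config("client_b")
    print(map_case_fields({"case_title": "Phishing report", "severity": 3}, config["field_map"]))
```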

Before any custom playbook sees production traffic, it needs rigorous testing. This isn’t just about functionality; it’s about safety. A poorly tested playbook can cause more damage than the threat it was designed to stop. We always start in a dedicated sandbox environment that mirrors production as closely as possible.

The testing process should be methodical. First, you validate each individual task. Does the isolate_device command actually work with the correct device ID artifact? Then you test the playbook task sequencing. Does the enrichment complete before the containment actions fire? Finally, you need to stress-test your conditional logic with various inputs. What happens when a critical artifact is missing? Playbook error handling isn’t an afterthought; it’s a primary feature.

We recommend creating a standard test suite for your playbooks. This might include a set of fake incidents with known-good data, edge cases with missing information, and scenarios designed to trigger approval gates. Running this suite before deployment catches the majority of issues. The playbook debugging tools in modern SOAR platforms are invaluable here, showing you exactly where data transforms happen and where decisions are made [2].
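A standard test suite can be as simple as a table of fake incidents and the outcome each should produce. This sketch assumes a hypothetical playbook with one required artifact (device_id) and one approval gate (critical assets). It covers a known-good case, a missing artifact, and an approval-gate trigger. It also checks that containment never runs before enrichment.

```python
REQUIRED_ARTIFACTS = ("device_id",)


def run_playbook(incident):
    """Hypothetical playbook under test: returns (outcome, steps executed in order)."""
    steps = []
    if any(not incident.get(name) for name in REQUIRED_ARTIFACTS):
        # Error handling as a feature: stop cleanly and route to a human.
        return "escalated_missing_artifact", steps
    steps.append("enrich")
    if incident.get("asset_critical"):
        return "awaiting_approval", steps
    steps.append("isolate_device")
    return "contained", steps


SUITE = [
    ("known good", {"device_id": "WS-0142"}, "contained"),
    ("missing device id", {"user_id": "jdoe"}, "escalated_missing_artifact"),
    ("critical asset", {"device_id": "SRV-01", "asset_critical": True}, "awaiting_approval"),
]


def run_suite(suite):
    """Return a list of failure messages; an empty list means the suite passed."""
    failures = []
    for name, incident, expected in suite:
        outcome, steps = run_playbook(incident)
        if outcome != expected:
            failures.append(f"{name}: expected {expected}, got {outcome}")
        # Sequencing check: containment must never fire before enrichment.
        if "isolate_device" in steps and (
            "enrich" not in steps or steps.index("enrich") > steps.index("isolate_device")
        ):
            failures.append(f"{name}: containment ran before enrichment")
    return failures


if __name__ == "__main__":
    print(run_suite(SUITE) or "all scenarios passed")
```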

You can tell when playbooks move from “experiment” to “infrastructure” because the real work becomes keeping them healthy, not just getting them to run. Once your custom playbooks are live, the focus shifts to management and steady improvement. At that point, version control for your playbooks isn’t optional. It’s the safety net.

This is a core principle of outsourced security automation and orchestration, where playbooks are treated as living infrastructure that must remain consistent, auditable, and reliable across multiple clients and environments.

We treat playbooks like code, even in a low-code interface. That means:

If a new version misbehaves (skipping a step, looping, or generating noisy alerts), you don’t want to troubleshoot in production with no way out. Versioning lets you hit “undo” at scale.
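In practice, teams that treat playbooks like code often keep their exported definitions in a Git repository. The in-memory registry below is a hypothetical stand-in that shows the core idea. Every published version is kept, and production points at one of them. Rollback just moves that pointer back.

```python
class PlaybookRegistry:
    """Keeps every published version of each playbook so production can roll back."""

    def __init__(self):
        self._versions = {}  # playbook name -> list of definitions, oldest first
        self._active = {}    # playbook name -> index of the version in production

    def publish(self, name, definition):
        versions = self._versions.setdefault(name, [])
        versions.append(definition)
        self._active[name] = len(versions) - 1
        return len(versions)  # 1-based version number

    def active(self, name):
        return self._versions[name][self._active[name]]

    def rollback(self, name):
        # Point production at the previous version; history itself is never deleted.
        if self._active[name] == 0:
            raise RuntimeError(f"{name} has no earlier version to roll back to")
        self._active[name] -= 1
        return self._active[name] + 1


if __name__ == "__main__":
    registry = PlaybookRegistry()
    registry.publish("phishing", {"steps": ["enrich", "contain"]})
    registry.publish("phishing", {"steps": ["enrich", "contain", "notify"]})
    registry.rollback("phishing")
    print(registry.active("phishing"))
```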

Once the logic is in place, the next question is: Is it actually working well?

Monitoring playbook health is an ongoing job. You’re not just checking if it runs, but how it runs. Useful areas to watch:

From those metrics, you might decide to:

The goal isn’t automation for its own sake; it’s fast, reliable response that you can trust under pressure.
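Checking how a playbook runs, not just whether it runs, can start with a few numbers computed from execution logs:

- success rate
- median run time
- the steps that fail most often
- how many runs still needed an analyst

The record format below is hypothetical, so map it to whatever your platform exports.

```python
from collections import Counter
from statistics import median

# Hypothetical execution-log records exported from a SOAR platform.
RUNS = [
    {"playbook": "phishing", "status": "success", "seconds": 42, "failed_step": None, "manual_steps": 0},
    {"playbook": "phishing", "status": "success", "seconds": 38, "failed_step": None, "manual_steps": 0},
    {"playbook": "phishing", "status": "failed", "seconds": 120, "failed_step": "block_ip_address", "manual_steps": 1},
    {"playbook": "phishing", "status": "success", "seconds": 55, "failed_step": None, "manual_steps": 2},
]


def health_report(runs):
    """Summarize how a playbook is running, not just whether it runs."""
    total = len(runs)
    failures = [r for r in runs if r["status"] != "success"]
    return {
        "runs": total,
        "success_rate": round((total - len(failures)) / total, 2) if total else None,
        "median_seconds": median(r["seconds"] for r in runs) if runs else None,
        "top_failing_steps": Counter(r["failed_step"] for r in failures).most_common(3),
        "runs_needing_analyst": sum(1 for r in runs if r["manual_steps"] > 0),
    }


if __name__ == "__main__":
    print(health_report(RUNS))
```

A falling success rate points at a broken integration. A rising count of runs needing an analyst points at logic worth automating next.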

Credits: CrowdStrike

For an MSSP, the scaling problem shows up fast. You can’t manually babysit hundreds of totally unique playbooks across clients, no matter how dedicated the team is.

So the strategy has to change. You build for reuse.

A practical way to handle this is to lean on modular playbook design, using:

With this modular approach, you can:

So you get both scale and flexibility: reusable building blocks at the core, and client-specific playbooks assembled on top of them.
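Modular design can be sketched as a library of small sub-playbooks, each of which takes and returns an incident. A separate function assembles a client-specific playbook from a list of module names. The modules and clients below are hypothetical. Because modules are looked up at assembly time, a typo in a client’s step list fails when the playbook is built, not halfway through an incident.

```python
from typing import Callable, Dict, List

Step = Callable[[dict], dict]


# Reusable building blocks (hypothetical sub-playbooks); each takes and returns the incident.
def enrich_sender(incident: dict) -> dict:
    return {**incident, "sender_reputation": "bad"}


def quarantine_email(incident: dict) -> dict:
    return {**incident, "email_quarantined": True}


def open_ticket(incident: dict) -> dict:
    return {**incident, "ticket": f"TICKET-{incident['id']}"}


LIBRARY: Dict[str, Step] = {
    "enrich_sender": enrich_sender,
    "quarantine_email": quarantine_email,
    "open_ticket": open_ticket,
}


def assemble(step_names: List[str]) -> Step:
    """Compose named library modules into one client-specific playbook."""
    steps = [LIBRARY[name] for name in step_names]  # KeyError surfaces typos at build time

    def playbook(incident: dict) -> dict:
        for step in steps:
            incident = step(incident)
        return incident

    return playbook


# Two clients share the same modules but assemble them differently.
client_a = assemble(["enrich_sender", "quarantine_email", "open_ticket"])
client_b = assemble(["enrich_sender", "open_ticket"])  # this client quarantines manually

if __name__ == "__main__":
    print(client_a({"id": 7}))
    print(client_b({"id": 7}))
```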

You can shape the steps in your playbooks so your team handles alerts the same way every time. Workflow design features let you tailor each security orchestration playbook. Custom scripts and task sequencing guide each step, and artifact enrichment workflows help you act faster.

Simple rules in the SOAR rule engine can guide actions. Conditional tasks and dynamic branching let a playbook change course when something changes. Workflow approval gates stop risky actions until someone signs off, and a playbook testing simulator lets you check each step before it runs for real.

SOAR integration connectors link your tools together. Parameter inputs and data mapping pull the right data into each playbook. SOAR API integrations let you run custom actions across systems. Alert processing moves alerts through the pipeline, and cloud connectors bring in outside data when you need it.

Execution logs show you what broke. Debugging tools let you test each task, and validation rules catch errors early. You can review loop structures and sub-workflows, and you can track workflow performance metrics to see what slows the process down.

Version control stores every change, and collaboration features let teams edit safely. Reuse libraries and incident playbook templates let you share proven work, and import and export let you move it between environments. Rollback mechanisms protect you when an update fails. Health monitoring keeps your flows running smoothly.

Customizing SOAR workflows works best when you move step by step. You start with a basic playbook. You deploy it. You review the execution logs and performance metrics. You identify bottlenecks. You note where analysts still intervene. You refine it.

Modern SOAR platforms give you safe version control. You test new versions in a sandbox, then push them to production. You expand automation gradually. You free your team to focus on threats that need human judgment.

Your playbooks evolve as your tools and threats change. Everything starts with choosing the first artifact you want to automate.

If you want expert support to build more efficient workflows, you can join today here.